Cluster Overview#

The platform team currently maintains several Kubernetes clusters to support the development and operations of the platform. This page describes those clusters and the different use cases for each.

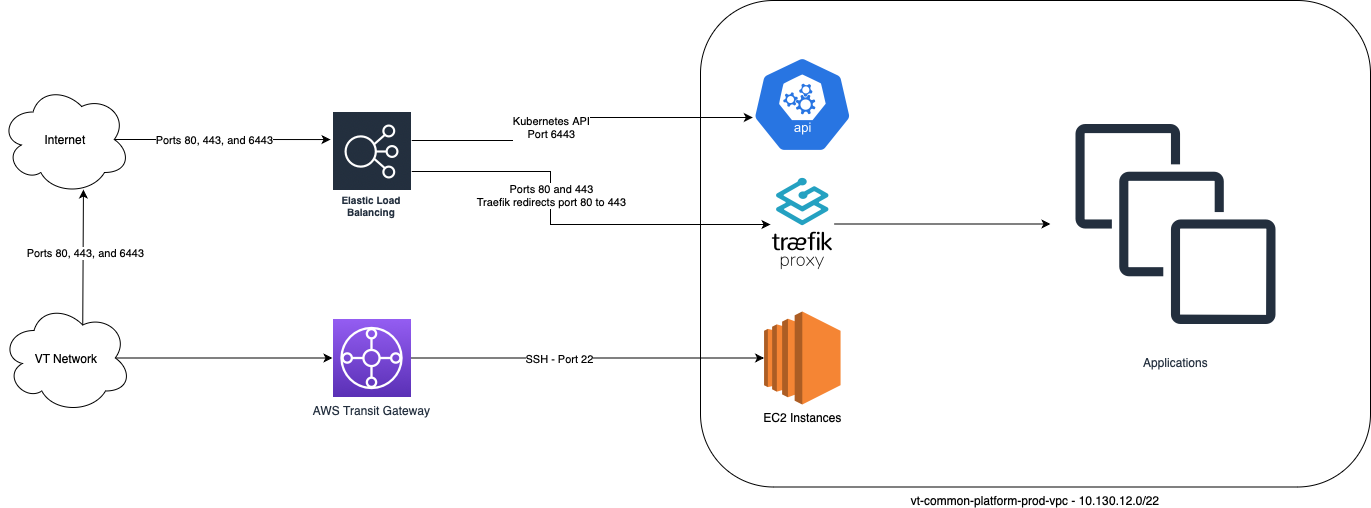

AWS EKS Environment#

All clusters running in AWS leverage EKS to run the Kubernetes control plane. This is the default location for all of our tenant workloads.

Load Balancers#

The AWS clusters use an AWS load balancer provisioned by the ingress that the Traefik Helm chart creates.

Networking#

The AWS clusters use Cilium, which runs on top of the three VPCs, one per AZ. By default, EKS clusters run Amazon's VPC CNI; we uninstall it and replace it with Cilium.

Storage#

EBS#

Elastic Block Storage is the default storage class on the cluster.

EFS#

EFS is currently provisioned as part of the cluster code and thus has to be added per customer requesting it.

S3#

S3 Storage is not currently surfaced to customers as part of the cluster. It is used by the platform in various ways to store information to support internal infrastructure.

Nothing prohibits customers from referencing outside S3, but at the moment, we do not provide an object storage class.

Logging#

Splunk#

A Filebeat DaemonSet runs on every node, collecting logs from the systems and pods and forwarding them to Splunk.

CloudWatch#

Additional logging for Amazon components is available in CloudWatch. CloudWatch logs are not currently exported to Splunk.

DVLP cluster#

- Purpose: Development and verification of new or updated capabilities.

- Access permissions: All platform admins have both read and write access. However, write access is intended to be used for experimentation. Long-term or persistent changes should be made through code and applied via pipelines.

- Links to know:

- Cluster base name: dvlp.aws.itcp.cloud.vt.edu

- Alertmanager: https://alertmanager.dvlp.aws.itcp.cloud.vt.edu

- Grafana: https://grafana.dvlp.aws.itcp.cloud.vt.edu

- Headlamp: https://headlamp.dvlp.aws.itcp.cloud.vt.edu/

- Hubble: https://hubble.dvlp.aws.itcp.cloud.vt.edu

- Kubecost: https://kubecost.dvlp.aws.itcp.cloud.vt.edu

- Prometheus: https://prometheus.dvlp.aws.itcp.cloud.vt.edu

- Traefik Dashboard: https://traefik.dvlp.aws.itcp.cloud.vt.edu


PPRD cluster#

- Purpose: A place for clients to test changes staged to be released in production.

- Access permissions: All platform admins have read access by default. Temporary write access can be granted by adding a user to the correct ED group.

- Links to know:

- Cluster base name: pprd.aws.itcp.cloud.vt.edu

- Alertmanager: https://alertmanager.pprd.aws.itcp.cloud.vt.edu

- Grafana: https://grafana.pprd.aws.itcp.cloud.vt.edu

- Headlamp: https://headlamp.pprd.aws.itcp.cloud.vt.edu/

- Hubble: https://hubble.pprd.aws.itcp.cloud.vt.edu

- Kubecost: https://kubecost.pprd.aws.itcp.cloud.vt.edu

- Prometheus: https://prometheus.pprd.aws.itcp.cloud.vt.edu

- Traefik Dashboard: https://traefik.pprd.aws.itcp.cloud.vt.edu


PROD cluster#

- Purpose: Running all tenant workloads, including their various tiers, which are namespaced separately.

- Access permissions: All platform admins have read access by default. Temporary write access can be granted by adding a user to the correct ED group.

- Links to know:

- Cluster base name: prod.aws.itcp.cloud.vt.edu

- Cluster alias: platform.it.vt.edu and *.platform.it.vt.edu

- Alertmanager: https://alertmanager.prod.aws.itcp.cloud.vt.edu

- Grafana: https://grafana.prod.aws.itcp.cloud.vt.edu

- Headlamp: https://headlamp.prod.aws.itcp.cloud.vt.edu/

- Hubble: https://hubble.prod.aws.itcp.cloud.vt.edu

- Kubecost: https://kubecost.prod.aws.itcp.cloud.vt.edu

- Prometheus: https://prometheus.prod.aws.itcp.cloud.vt.edu

- Traefik Dashboard: https://traefik.prod.aws.itcp.cloud.vt.edu


Configuring local CLI for admin access#

See Running Terraform Locally for now. We will eventually have the same SSO setup for both baseline and non-baseline clusters.

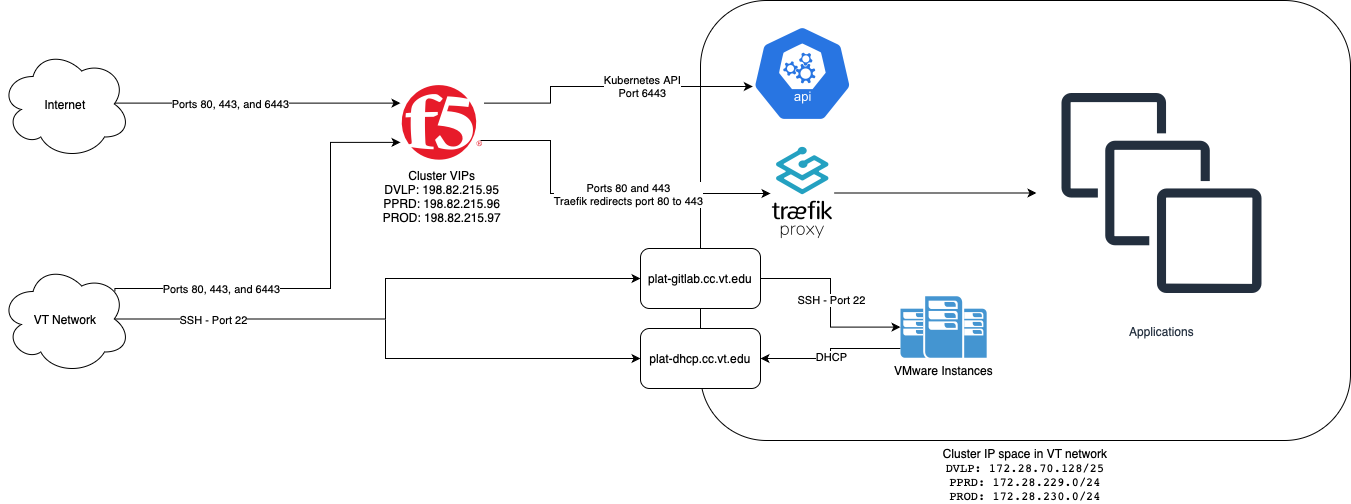

On-prem EKS-A Environment#

All clusters running on-prem leverage EKS-A to run the Kubernetes control plane. On-prem resources are limited by the amount of hardware we can dedicate to them.

Load Balancers#

The on-premises EKS-A clusters reside in a private subnet behind the F5, which exposes Traefik for hosted applications.

Node Routing

Outbound traffic from the nodes behind the F5 to the internet is SNATed to the public address of the F5. However, if the destination IP is local to VT's network, the traffic is routed using the node's private address.
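
The routing rule above can be modeled as a small decision function. This is only an illustrative sketch in Python, not the F5 configuration: the `CAMPUS_NETWORKS` range, the function name `egress_source`, and every address used below are hypothetical placeholders.

```python
import ipaddress

# Hypothetical placeholder range standing in for "VT's network".
# The real campus prefixes are not listed on this page.
CAMPUS_NETWORKS = [ipaddress.ip_network("10.0.0.0/8")]


def egress_source(dest_ip, node_private_ip, f5_public_ip,
                  campus_networks=CAMPUS_NETWORKS):
    """Return the source address a destination would see for node traffic.

    Destinations inside the campus networks see the node's private
    address; everything else sees the F5's public (SNAT) address.
    """
    dest = ipaddress.ip_address(dest_ip)
    if any(dest in net for net in campus_networks):
        return node_private_ip
    return f5_public_ip


if __name__ == "__main__":
    # Campus-local destination: routed with the private address.
    print(egress_source("10.1.2.3", "10.20.0.5", "203.0.113.10"))
    # Internet destination: SNATed to the F5's public address.
    print(egress_source("198.51.100.7", "10.20.0.5", "203.0.113.10"))
```

In practice this matters when a service on VT's network filters by source address: it would need to allow the nodes' private range rather than the F5's public address.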

Networking#

EKS Anywhere natively uses Cilium, which the team has left in place. The current procedure is to allow the version to float with the EKS Anywhere lifecycle management tools.

Storage#

vSAN#

vSAN is the default storage class on the cluster. vSAN allows the hypervisors in a cluster to form a virtual SAN with their resources, reducing cost and keeping storage nearby. vSAN is enabled with a CSI Driver.

NFS#

NFS is currently provisioned as part of the landlord. Our current implementation of NFS is supported by the NFS CSI Driver.

Object Storage#

Object storage is not currently supported in our on-premises EKS-A cluster.

Logging#

Splunk#

A Filebeat DaemonSet runs on every node, collecting logs from the systems and pods and forwarding them to Splunk.

DVLP cluster#

- Purpose: Development and verification of new or updated capabilities.

- Access permissions: All platform admins have both read and write access. However, write access is intended to be used for experimentation. Long-term or persistent changes should be made through code and applied via pipelines.

- Links to know:

- Cluster base name: dvlp.op.itcp.cloud.vt.edu

- Alertmanager: https://alertmanager.dvlp.op.itcp.cloud.vt.edu

- Grafana: https://grafana.dvlp.op.itcp.cloud.vt.edu

- Headlamp: https://headlamp.dvlp.op.itcp.cloud.vt.edu/

- Kubecost: https://kubecost.dvlp.op.itcp.cloud.vt.edu

- Prometheus: https://prometheus.dvlp.op.itcp.cloud.vt.edu

- Traefik Dashboard: https://traefik.dvlp.op.itcp.cloud.vt.edu


PPRD cluster#

- Purpose: A place for clients to test changes staged to be released in production.

- Access permissions: All platform admins have read access by default. Temporary write access can be granted by adding a user to the correct ED group.

- Links to know:

- Cluster base name: pprd.op.itcp.cloud.vt.edu

- Alertmanager: https://alertmanager.pprd.op.itcp.cloud.vt.edu

- Grafana: https://grafana.pprd.op.itcp.cloud.vt.edu

- Headlamp: https://headlamp.pprd.op.itcp.cloud.vt.edu/

- Kubecost: https://kubecost.pprd.op.itcp.cloud.vt.edu

- Prometheus: https://prometheus.pprd.op.itcp.cloud.vt.edu

- Traefik Dashboard: https://traefik.pprd.op.itcp.cloud.vt.edu


PROD cluster#

- Purpose: Running all production tenant workloads, including their various tiers, which are namespaced separately.

- Access permissions: All platform admins have read access by default. Temporary write access can be granted by adding a user to the correct ED group.

- Links to know:

- Cluster base name: prod.op.itcp.cloud.vt.edu

- Alertmanager: https://alertmanager.prod.op.itcp.cloud.vt.edu

- Grafana: https://grafana.prod.op.itcp.cloud.vt.edu

- Headlamp: https://headlamp.prod.op.itcp.cloud.vt.edu/

- Kubecost: https://kubecost.prod.op.itcp.cloud.vt.edu

- Prometheus: https://prometheus.prod.op.itcp.cloud.vt.edu

- Traefik Dashboard: https://traefik.prod.op.itcp.cloud.vt.edu

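
The link lists on this page follow one naming pattern: `https://<service>.<env>.<location>.itcp.cloud.vt.edu`, where `<location>` is `aws` or `op`. As a convenience, that pattern can be sketched in Python as below. The function and constant names are illustrative and not part of any platform tooling, and the service lists simply mirror the links shown on this page (Hubble appears only in the AWS lists).

```python
ENVIRONMENTS = ("dvlp", "pprd", "prod")
LOCATIONS = ("aws", "op")

# Services as listed on this page; the on-prem lists do not include Hubble.
AWS_SERVICES = ("alertmanager", "grafana", "headlamp", "hubble",
                "kubecost", "prometheus", "traefik")
ONPREM_SERVICES = tuple(s for s in AWS_SERVICES if s != "hubble")


def cluster_base_name(env, location):
    """Build the cluster base name, e.g. dvlp.aws.itcp.cloud.vt.edu."""
    if env not in ENVIRONMENTS:
        raise ValueError(f"unknown environment: {env!r}")
    if location not in LOCATIONS:
        raise ValueError(f"unknown location: {location!r}")
    return f"{env}.{location}.itcp.cloud.vt.edu"


def service_urls(env, location):
    """Map each listed service name to its URL for the given cluster."""
    base = cluster_base_name(env, location)
    services = AWS_SERVICES if location == "aws" else ONPREM_SERVICES
    return {name: f"https://{name}.{base}" for name in services}


if __name__ == "__main__":
    for name, url in service_urls("pprd", "op").items():
        print(f"{name}: {url}")
```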

Accessing the clusters through platform-gitlab.cc.vt.edu#

On-prem clusters are provisioned and managed through platform-gitlab.cc.vt.edu.

- SSH to platform-gitlab.cc.vt.edu.

- Switch to the gitlab-runner user and enter your JWT token or PID password and 2FA.

- Cluster configurations are kept at /apps/eksa/[dvlp|pprd|prod]/[dvlp|pprd|prod]-workload/[dvlp|pprd|prod]-workload-eks-a-cluster.kubeconfig. For example, to load the kubeconfig for the DVLP cluster:
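
A minimal sketch of resolving that path pattern, written in Python. The function name `kubeconfig_path` is illustrative and not part of any platform tooling. The sketch relies on the standard `KUBECONFIG` environment variable that `kubectl` reads; in a shell, the equivalent is to export `KUBECONFIG` with the resulting path.

```python
import os
from pathlib import PurePosixPath

ENVIRONMENTS = ("dvlp", "pprd", "prod")


def kubeconfig_path(env):
    """Return the kubeconfig path for an on-prem EKS-A workload cluster."""
    if env not in ENVIRONMENTS:
        raise ValueError(f"unknown environment: {env!r}")
    return (PurePosixPath("/apps/eksa") / env / f"{env}-workload"
            / f"{env}-workload-eks-a-cluster.kubeconfig")


if __name__ == "__main__":
    path = kubeconfig_path("dvlp")
    # Setting KUBECONFIG here affects only this process and any child
    # processes it starts (for example, a kubectl subprocess).
    os.environ["KUBECONFIG"] = str(path)
    print(path)
```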